Study Grade

Study Grade is a feature that grades your study on how closely its design follows best practices. After deployment, the Study Grade job runs a set of checks against your study design. These checks review definitions, or groups of definitions, against Veeva CDMS best practices and highlight areas that affect study design, performance, and data extracts. You can view Study Grade records in the Study Grade tab.

Prerequisites

Users with the standard CDMS Study Designer study role can perform the actions described below by default. If your organization uses custom Study Roles, your role must grant the following permissions:

| Type | Permission Label | Controls |
|---|---|---|
| Standard Tab | Studio Tab | Ability to access the Studio tab |
| Standard Tab | Study Grade Tab | Ability to access the Study Grade tab |
| Functional Permission | View Study Grade | Ability to view Study Grade records |

If your Study contains restricted data, you must have the Restricted Data Access permission to view it.

Learn more about Study Roles.

Note that these checks are intended only to evaluate your design and offer suggestions (much like the Warnings in Validations) for current or future study design changes. Because study designs are complex and varied, some designs must deviate from best practices, and those deviations lower the overall grade. For these reasons, this feature is not designed to measure or evaluate a person's performance and must not be used for that purpose.

Using Study Grade

Study Grade automatically runs on your study after you successfully deploy from DEV to TST. You can also run the Study Grade report manually from the Actions menu in Studio. After deployment, Vault runs a job that evaluates the study design and logs the results in an Excel™ file. When the job completes, Vault creates a Study Grade record in the Study Grade tab. The record contains an overall quantitative score as well as a detailed score for each check.

Once your study receives a quantitative score, Veeva CDMS Services reviews your results and determines what changes would improve your study design. These suggestions, along with a qualitative score, are logged in the Study Grade Reviews subtab of the Study Grade record.

After your study has been reviewed and graded, you can work with your Veeva Services reviewer to determine your next steps.

Manually Running the Study Grade Report

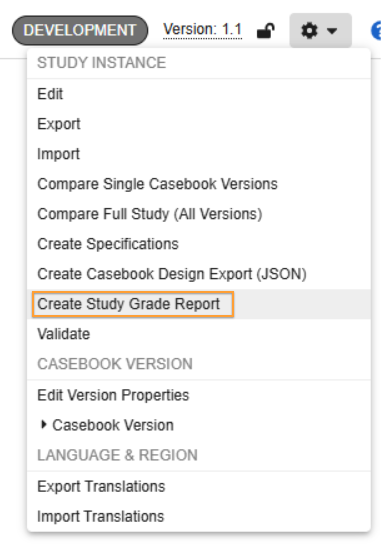

You can manually run the Study Grade report in the Study Settings tab in Studio.

To run the Study Grade report:

- Navigate to Studio > Study Settings.

- Click the Actions menu and select Create Study Grade Report.

- Click Run Now.

Vault runs the Study Grade report and emails you when the report is complete.

How to View your Study Grade and Study Grade Review

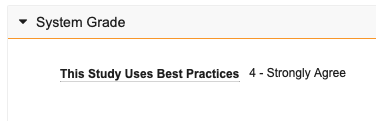

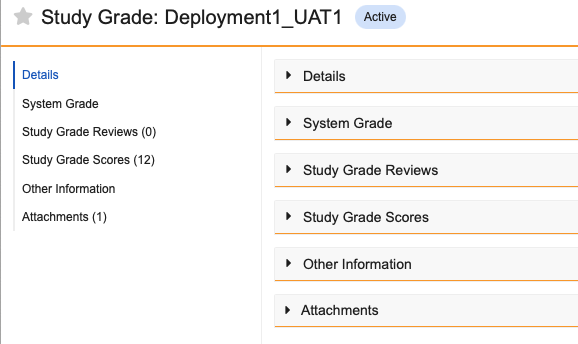

Each Study Grade record contains the following subtabs: Details, System Grade, Study Grade Reviews, Study Grade Scores, Other Information, and Attachments.

- Details: This section contains study information such as the Name, Study, Study Version and Build, and Last Run (the last time the system updated the record).

- System Grade: This section contains your study's quantitative score, calculated as a weighted average of the Study Grade scores. This score is displayed in the This Study Uses Best Practices field.

- Study Grade Reviews: This section contains your Study Grade Reviews, each consisting of a qualitative score and an evaluation written by a Veeva Services reviewer.

- Study Grade Scores: This section lists the checks that were run against your study environment and the score for each check.

- Other Information: This section provides basic information about the Study Grade record such as Created Date and Last Modified Date.

- Attachments: This section contains your Study Report Card in the form of an Excel™ file.

Understanding your Study Grade

Vault provides two types of scores, each on a 0-4 scale, as part of your Study Grade: a score for each check, which rates the records subject to that check, and an overall score, calculated as a weighted average of the individual check scores.
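To make the overall calculation more concrete, here is a minimal Python sketch of a weighted average over per-check scores. It is not Vault's implementation. The weights are hypothetical because this page does not publish them, and the sketch assumes that checks scored 0 - N/A are left out of the average, which the page also does not state.

```python
from typing import Mapping, Optional


def overall_score(scores: Mapping[str, int], weights: Mapping[str, float]) -> Optional[float]:
    """Weighted average of per-check scores on the 0-4 scale.

    Assumption: a score of 0 (N/A) means no assessment was made,
    so that check is excluded instead of pulling the average down.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for check, score in scores.items():
        if not 0 <= score <= 4:
            raise ValueError(f"{check}: score {score} is outside the 0-4 scale")
        if score == 0:
            continue  # N/A: skip this check entirely
        weight = weights.get(check, 1.0)  # hypothetical default weight of 1.0
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0:
        return None  # every check was N/A, so there is nothing to average
    return weighted_sum / total_weight


# Hypothetical per-check scores and weights, for illustration only.
scores = {"codelist_consistency": 4, "form_order": 2, "rule_scope": 3, "event_group_usage": 0}
weights = {"codelist_consistency": 1.0, "form_order": 2.0, "rule_scope": 1.0}
print(f"{overall_score(scores, weights):.2f}")  # prints 2.75
```

In this example, weighting form_order twice as heavily pulls the result below the simple mean of the three assessed checks (3.00).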

These scores summarize the checks from the system's perspective. Although the scores are standardized, study design variability and requirements may call for deviations, which is why we have a qualitative review process.

The following table provides a general overview of the Study Grade scoring system:

| Score | Explanation |
|---|---|
| 0 - N/A | The design was not applicable for evaluation in this context. This score may occur when the system cannot determine the relevance of the design to the specified criteria, or when the aspect being measured does not apply to this specific record. As a result, no assessment was made.* |
| 1 - Strongly Disagree | The design deviates significantly from the expectations of the system and falls well below the standards for performance or usability, indicating a substantial gap in alignment. |
| 2 - Disagree | The design demonstrates some alignment with the expected design criteria, but still does not meet key expectations for performance or usability. There may be a few aspects that work, but the overall design is insufficient and requires improvements to meet the system's baseline standards. |
| 3 - Agree | The design aligns well with the expected criteria. Performance and usability are in line with the system's expectations, and the design meets the requirements. There may be minor opportunities for improvement, but the record is generally functional and efficient. |
| 4 - Strongly Agree | The design meets the system's expectations for performance and usability and represents an ideal alignment with the criteria. |

*Vault skips the evaluation of records when:

- They aren't used in the schedule

- They are rule-related and fit the following criteria:

  - One or more identifiers don't reconcile to definitions in use

- They are inactive and/or archived
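The skip conditions above can be read as a simple predicate. The Python sketch below encodes one reading of them: a record is skipped if it isn't used in the schedule, if it is rule-related and has an identifier that doesn't reconcile to a definition in use, or if it is inactive or archived. The `DesignRecord` class and its fields are hypothetical stand-ins, not Vault objects.

```python
from dataclasses import dataclass


@dataclass
class DesignRecord:
    """Hypothetical, simplified stand-in for a study design record."""
    name: str
    used_in_schedule: bool = True
    is_rule: bool = False
    has_unreconciled_identifier: bool = False  # identifier points to a definition not in use
    inactive: bool = False
    archived: bool = False


def is_skipped(record: DesignRecord) -> bool:
    """Return True if the record would not be evaluated (scored 0 - N/A)."""
    if not record.used_in_schedule:
        return True
    if record.is_rule and record.has_unreconciled_identifier:
        return True
    return record.inactive or record.archived


# Hypothetical records, for illustration only.
records = [
    DesignRecord("demographics"),
    DesignRecord("legacy_form", used_in_schedule=False),
    DesignRecord("ae_date_rule", is_rule=True, has_unreconciled_identifier=True),
    DesignRecord("retired_form", archived=True),
]
print([r.name for r in records if not is_skipped(r)])  # prints ['demographics']
```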

Checks and How to Resolve Them

The following table details possible Study Grade checks and how to resolve any errors that are logged against them:

| Name | Label | How to Resolve |
|---|---|---|
| codelist_consistency | Codelists are consistently formatted | Ensure that the formatting (capitalization and punctuation) matches across codelist codes and decodes for Codelists and Codelist Items. |
| codelist_display | Codelists are displayed appropriately for the number of items in the codelist | Best practices recommend that codelists with fewer than 4 items display as radio buttons and codelists with more than 6 items display as a picklist. Radio buttons often represent codelists best because every option is visible; however, too many options affect how the form is displayed and used by the site. |
| event_group_repeating | Repeating Event Groups are used where they can be | Review events that have similar forms and, where possible, create repeating Event Groups from these events. |
| event_group_usage | Event Groups have the appropriate utilization | Review your study's use of Event Groups. Are you overusing them (for example, an Event Group for every Event) or underusing them (for example, only a single Event Group)? Note that in some cases, a single Event Group may make sense for your study. |
| form_order | Forms reused in multiple events are in the same relative order | If you receive this check, you are reusing forms in multiple events and some of those forms appear in a different order relative to each other. To resolve this, review the events where you reuse forms and arrange the forms so that they have the same relative order in every event that uses them. Because the check can't determine the correct order, you must decide which order is correct and model your forms accordingly. |
| form_total_items | Forms display the right number of items | This check looks for forms that may display too many items. Possible causes include a defaulted repeating item group that produces a large number of rows, a repeating item group that contains a large number of items, or an excessively large repeat maximum on a repeating item group. To resolve this, review the item group against dataset requirements to ensure that all of its data needs to be collected in this dataset, ensure that only the expected defaults are set, and reduce repeat maximum values to a reasonable number. We realize that study requirements may prevent these updates in some cases; be aware that large forms can cause performance issues in EDC. |
| form_unnecessary_copy | Unnecessary copies of forms are avoided | Reuse Items, Item Groups, and Forms when possible. In some cases, this issue is unavoidable. |
| rule_blank_checks | Rules check for null and blank values | Ensure that rules use IsBlank() for text, date, and number identifiers where applicable. |
| rule_complexity | Rules are as complex as necessary | In many cases, you should write complex rules to reduce the overall number of rules; however, the permutations of some complex rules can cause performance issues. In these cases, you may need to split rules up to reduce the number of possible permutations in a single rule. To resolve this issue, review non-fully qualified identifiers or actions and repeating objects to see whether you can reduce the checks or split some of them into separate rules. |
| rule_dynamics_results | The dynamic rule actions leverage Global appropriately | Change the Dynamic Action Scope to match the target of the action and the Event Group. If the scope is Global, the action identifier is fully qualified, and the Event Group is repeating, one of these values needs to change: use @Event in the action identifier or change the Scope to something other than Global. |
| rule_qualified_identifiers | Rules use @ identifiers on repeating objects | Rules that go against repeating definitions (Event Group, Form, Item Group) should either not be qualified and have a matching scope of Event or Event Group or not be qualified and have a global scope. |
| rule_scope | Rules are scoped appropriately for the action | The target of the action and the identifiers used in the expression must either all be fully qualified or all be non-fully qualified. Update the action or the identifiers so that they match in their use of @Form, @Event, etc. |
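Two of the checks in the table describe rules that are simple enough to sketch. The Python below is a hypothetical illustration, not Vault's check logic. `recommended_codelist_display` applies the codelist_display thresholds; the table gives no recommendation for 4 to 6 items, so the sketch returns "either" for that range. `form_order_conflicts` flags pairs of reused forms whose relative order differs between events. The event and form names are made up.

```python
from itertools import combinations


def recommended_codelist_display(item_count: int) -> str:
    """Apply the codelist_display thresholds: <4 radio buttons, >6 picklist."""
    if item_count < 4:
        return "radio"
    if item_count > 6:
        return "picklist"
    return "either"  # 4-6 items: the guidance gives no fixed recommendation


def form_order_conflicts(events: dict[str, list[str]]) -> set[tuple[str, str]]:
    """Return pairs of forms whose relative order differs between events."""
    first_seen: dict[tuple[str, str], bool] = {}  # (a, b) -> "a comes before b"
    conflicts: set[tuple[str, str]] = set()
    for forms in events.values():
        position = {form: i for i, form in enumerate(forms)}
        # Compare every pair of forms that appear together in this event.
        for a, b in combinations(sorted(position), 2):
            a_first = position[a] < position[b]
            if (a, b) in first_seen and first_seen[(a, b)] != a_first:
                conflicts.add((a, b))
            first_seen.setdefault((a, b), a_first)
    return conflicts


# Hypothetical schedule: week_2 reverses the order of labs and vitals.
events = {
    "screening": ["demographics", "vitals", "labs"],
    "week_1": ["vitals", "labs"],
    "week_2": ["labs", "vitals"],
}
print(recommended_codelist_display(3), recommended_codelist_display(5), recommended_codelist_display(8))
# prints radio either picklist
print(form_order_conflicts(events))  # prints {('labs', 'vitals')}
```

Like the form_order check, the sketch only reports conflicting pairs; it does not decide which order is correct.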